uk4b

Metadata Pretraining Towards Instruction Finetuning

We pretrain unidirectional language models on 4B tokens from UberText 2.0. We enrich document text with weakly structured metadata, such as title, tags, and publication year, enabling metadata-conditioned text generation and text-conditioned metadata prediction at the same time. We pretrain GPT-2 Small, Medium, and Large models on a single GPU, reporting training times, BPC on BrUK, BERTScore, and BLEURT on titles for 1000 News from the Future.

Install haloop to access the model: https://pypi.org/project/haloop/

See video on metadata pretraining (2m33s):

Model checkpoints are available at https://a.wilab.org.ua/gpt/. BLEURT/BERTscore evaluation on News from the Future is available on lang-uk/bleurt_eval

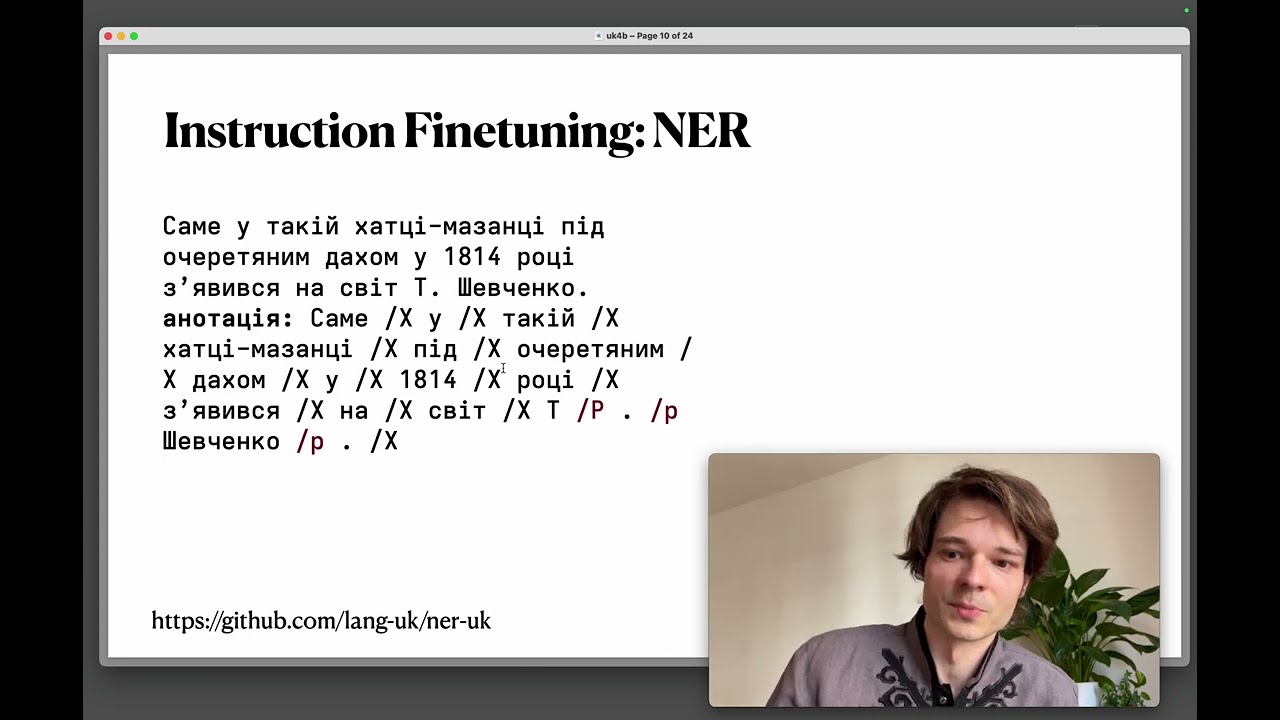

Next, we venture to formatting POS and NER datasets as instructions, and train low-rank attention adapters, performing these tasks as constrained text generation. See video (2m50s): https://www.youtube.com/watch?v=NDXJ9hXtf-o

See video on instruction finetuning (2m50s):

See POS and NER adapters can be trained using examples/Makefile.

This repository fuses karpathy/NanoGPT and asivokon/unlp-2023-shared-task

Authors:

- Volodymyr Kyrylov @proger

- Dmytro Chaplynskyi @dchaplinsky

Erratum

When reporting BPC results in the UNLP paper, we make a mistake switching to the log-2 base. True measurements are larger by a factor of ~2.08. The correct measurements are reported in commit 1c5dc381. The updated table is available in the preprint https://wilab.org.ua/uk4b.pdf.